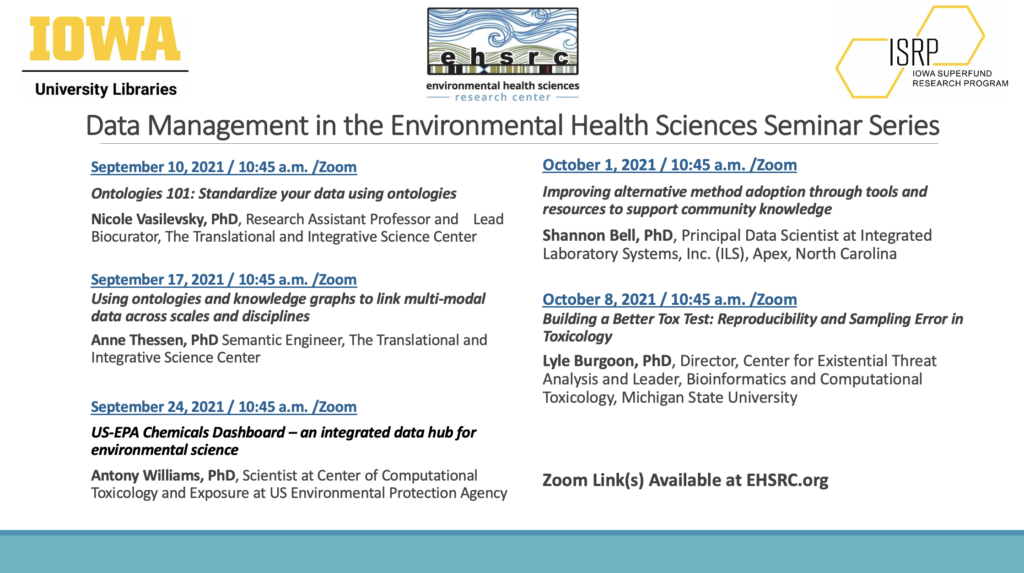

Data Management in the Environmental Health Sciences Seminar Series

September 10, 2021 / 10:45 a.m.

Ontologies 101: Standardize your data using ontologies

Nicole Vasilevsky, PhD, Research Assistant Professor and Lead Biocurator, The Translational and Integrative Science Center

Ontologies are powerful tools for classifying information, organizing data, and creating connections between data that enable enhanced information retrieval, filtering, and analysis. This talk will introduce the basic concepts of ontologies, with a focus on biomedical ontologies. It will provide examples of how ontologies are used in everyday life and in disease diagnostics. It will also discuss how you can contribute to ontologies and participate in the biomedical ontology community.

September 17, 2021 / 10:45 a.m.

Using ontologies and knowledge graphs to link multi-modal data across scales and disciplines

Anne Thessen, PhD, Semantic Engineer, The Translational and Integrative Science Center

Biology is a highly heterogeneous discipline that has benefited from reductionist empirical approaches, which have spawned thousands of subdisciplines, from neurobiology to landscape ecology, each with its own data types and research culture. While we have learned much by isolating and experimenting on individual components of natural systems, there is much to be gained from looking at systems more holistically, which requires data integration at an unprecedented scale. These holistic approaches also require expertise and data from outside biology, such as geology, computer science, and economics, to name a few. This talk will present methodologies for integrating multi-modal data across disciplines to enable large-scale, holistic studies of natural systems. It will also discuss the team science aspects of this work in the context of the GenoPhenoEnvo and Biomedical Data Translator projects.

September 24, 2021 / 10:45 a.m.

US-EPA Chemicals Dashboard – An integrated data hub for environmental science

Antony Williams, PhD, Scientist, Center for Computational Toxicology and Exposure, U.S. Environmental Protection Agency

The U.S. Environmental Protection Agency (EPA) Computational Toxicology Program uses computational and data-driven approaches that integrate chemistry, exposure, and biological data to help characterize potential risks from chemical exposure. The National Center for Computational Toxicology (NCCT) has measured, assembled, and delivered an enormous quantity and diversity of data for the environmental sciences. These include high-throughput in vitro screening data, in vivo and functional use data, exposure models, and chemical databases with associated properties. The CompTox Chemicals Dashboard website provides access to data associated with ~900,000 chemical substances. New data are added on an ongoing basis, including registrations of new and emerging chemicals, data extracted from the literature, chemicals studied in our labs, and data of interest to specific research projects at the EPA. Hazard and exposure data have been assembled from a large number of public databases, and as a result the dashboard surfaces hundreds of thousands of data points. The dashboard also includes experimental and predicted physicochemical property data, in vitro bioassay data, and millions of chemical identifiers (names and CAS Registry Numbers) to facilitate searching. Additional integrated modules provide real-time prediction of physicochemical properties and toxicity endpoints, as well as an integrated search of PubMed. This presentation will provide an overview of the CompTox Chemicals Dashboard and how it has developed into an integrated data hub for environmental data. This abstract does not necessarily represent the views or policies of the U.S. Environmental Protection Agency.

October 1, 2021 / 10:45 a.m.

Improving alternative method adoption through tools and resources to support community knowledge

Shannon Bell, PhD, Principal Data Scientist at Integrated Laboratory Systems, Inc. (ILS), Apex, North Carolina

Over the past decade, efforts ranging from publications to workshops to science policy have been directed at moving from traditional, animal-based toxicity testing toward new approach methodologies (NAMs) that do not require animals. Knowledge gaps are key barriers to NAM adoption, both as technical information deficiencies and as a lack of confidence in the methodologies. Information, consisting of data and the context in which they are used, is constantly evolving and is typically a focal point when considering knowledge gaps. Efforts that use standardized terminologies and improve data FAIRness (data should be findable, accessible, interoperable, and reusable) help address information challenges. Improving acceptance of the information, and of the methods and tools that generate the data, is equally important. Increasing access to resources that promote approachability and transparency can help build confidence among end users. This can be done by creating user-friendly tools that give diverse users an access point to explore data and techniques. These resources build comfort and context that help bridge the communication gap between naive users, who are often “traditionally” trained in in vivo approaches, and subject matter experts in NAMs. This presentation will highlight the DNTP’s Integrated Chemical Environment and other freely available web resources to illustrate ways in which knowledge gaps for NAM adoption are being addressed. Three common questions will be addressed as part of an overarching user story: How do I identify and obtain data for my compound? How can I put these data into a relevant context? How do I interpret and apply the data? Structural similarity, read-across, and in vitro to in vivo extrapolation will be discussed as a series of user stories highlighting how web-based tools can promote NAM adoption by closing knowledge gaps.

October 8, 2021 / 10:45 a.m.

Building a Better Tox Test: Reproducibility and Sampling Error in Toxicology

Lyle Burgoon, PhD, Director, Center for Existential Threat Analysis and Leader, Bioinformatics and Computational Toxicology, Michigan State University

Most current computational toxicology predictive models require toxicology data from laboratory experiments. Although this is not always apparent, the predictions from these models can have significant ramifications. For instance, a combatant commander in the Army may require soldiers to put on MOPP gear (suits that protect soldiers from chemical exposures) during a battle based on toxicity predictions that use current environmental levels from sensors as inputs. The problem is that MOPP gear is difficult to move and fight in, making our soldiers easier targets. Inaccurate predictions can also mean that taxpayer money is wasted on unnecessary remediation of training grounds. I have noticed that the toxicology studies that supply data to our computational models tend to be underpowered, exhibit excessive sampling error, and lack the details required for reproducibility. In many cases, chemicals are being found toxic based only on p-values, a practice the American Statistical Association considers very poor. In this talk, I will discuss and demonstrate the problems with sampling error and reproducibility that I am seeing both as a regulatory toxicologist and as a military scientist. I will discuss how we, as a toxicology community, can do better to improve our science and the reproducibility of our studies. And I will demonstrate why these steps are necessary for ensuring the quality of our future computational toxicology models and for safeguarding human health. There are real-world consequences when we fail to practice science well, and together, we can make a difference.